People Should Know When They Are Conversing with a Bot

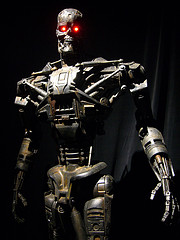

Credit: Dick Thomas Johnson (CC BY 2.0)

As artificial intelligence (AI) advances and is adopted into more products, there will be more and more opportunities to use automated systems to converse with people in community and customer support efforts, especially when they are asking something that a lot of people have asked before.

For example, x.ai is a neat service that helps you schedule meetings through an automated personal assistant. Ryan Leslie, through his Disruptive Multimedia platform (which I like), encourages fans to text him and then puts them through an automated text message conversation to confirm that they have joined his music club.

We’ll only see more of this. There’s a lot of potential for it.

However, if we want to build trust with our community, it should always be clear to people that they are having a conversation with a bot. We should not introduce the AI in a way that might suggest they are speaking with a human. That creates an expectation. Some might feel that if the service is good enough, it doesn’t matter and that, if people find out, they’ll just have an “aha” moment. I can see that logic, and I’m sure it’ll hold true for some.

In other cases, it will create a feeling of betrayal and of being tricked. The expectation, that this was a human, was not met. This is easy to fix. It’s messaging, and it takes only a word or two extra to say something is automated when it is first introduced.

To be clear, I’m not talking about confirmation or notification emails. There is no conversation there, unless the member responds. I’m talking about actual conversation. You say something to the AI and it responds, and continues a conversation with you, as if human.

In community, trust is everything. The way that you gain trust is to be upfront and clear, not to try to sneak something into people’s everyday lives. People often reject things introduced in that manner, even if they are great things that work well. The delivery of your message is often as important as the content.

The goal shouldn’t be to trick people into thinking the AI is human. Instead, we should strive to make it clear that it isn’t and impress them with how well it works.